|

Kohonen Neural Networks - the foundations for modern Artificial Intelligent Systems (AI) and Machine Learning (ML)

| Note for Historical Reference |

|

Back in 1999, as a young software developer, I wrote one of the very first online demonstrations of an API-driven, server-based Kohonen Neural Network. It processed natural language speech input from users, determined what they wanted to do and then triggered various ongoing activities. A client application called a data-centre-hosted server via an API and requested the best response to give. The AI network was hosted on a server in a data centre because of its size and so that it could continually adapt based on a variety of inputs from multiple users.

This eventually formed the core software engine of my 2001 PhD research paper, The Virtual Bodies Project, which set out to build a new HCI (Human Computer Interface) driven by Artificial Intelligence, allowing disabled people to interact with a computer and their environment without needing to know how to operate the computer or being able to operate it.

Now, around 20 years later, these ideas and concepts are finally becoming commonplace through devices like Google Home, Alexa and Siri. At the time, however, this was ground-breaking, brand-new research into this space and into how the internet could be used.

Note: The text that follows this section is as written and published back in 1999 to explain the first network created. It has not been updated, so some references may be out of date! Via the link you can download the high-level summary paper behind the Virtual Bodies Project as published in 2001.

|

| What is a Kohonen Neural Network? |

|

The Kohonen Neural Network is a development of the "perceptron" network developed by Rosenblatt in 1962. Both networks make use of artificial neurons, which are electrical simulations of biological ones, the core component of a brain. These artificial neurons are mathematical abstractions that aim to emulate the functionality of biological neurons through computational code. This artificial abstraction is often termed neuro-computing, because it is fundamentally different to digital computing.

Neurons are what make a brain a much more powerful processor than a computer: through careful organisation of its neurons, the brain can operate much more efficiently than the most powerful of today's computers. A neuron on its own is an incredibly simple object. In its basic form it is made up of an input, an operator and an output.

|

| How does the Virtual Bodies Project use it? |

|

The Virtual Bodies Project will create and make maximum use of an Artificial Neural Network (ANN) that is capable of recognising given textual input sequences. These input sequences consist of letters making up familiar words. In its most basic form, an example input sequence could be h-e-l-l-o, where the actual word is hello, made up from the letters h, e, l, l and o. The neural network should then recognise this input sequence, if the sequence was contained in the training data for the network, and produce a response to it: to the input Hello, for example, the network could respond with Hello to you. The neural network should, if properly trained, be flexible about noise in the input sequence, so that Hexxo would also be recognised as Hello.

The Virtual Bodies Project will then take this new Artificial Neural Network (ANN) as its core logic engine, running it server side so that it can recognise given input sequences and then perform subsequent actions. The network attempts to recognise each input sequence, and the Virtual Bodies Project uses the recognition generated by the network to decide how best to respond: to the input HELLO, for example, it could respond with Hello to you. As before, a properly trained network should tolerate noise in the input sequence, so that Hexxo is also recognised as Hello.

The Virtual Bodies Project was developed on a series of servers in a data centre and offered an API-driven Kohonen Neural Network, all created in the Java programming language. It was data-centre hosted to allow for scaling of processor resources and memory.

|

| How was the network built and how does the "brain" operate? |

|

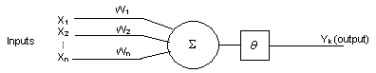

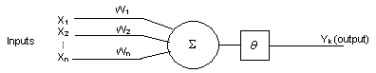

A neuron on its own is an incredibly simple object. In its basic form it is made up of an input, an operator and an output. The operator is a weighting system: given significant input, the neuron will fire, or give an output. A biological neuron is made up of dendrites (inputs), a cell body (the operator) and axons (outputs), and each neuron tends to be connected to tens of thousands of others.

An artificial neuron is made up of synapses (the equivalent of the dendrites and axons) and an adder (equivalent to the cell body).

Unlike biological neurons, artificial neurons tend to be connected to only a few other neurons, and they also have weighting factors on the synapses to enable the neuron to operate correctly. These artificial neurons are what make neuro-computing an artificial abstraction of the biological world: they are attempts at using mathematics to simulate and mimic it. Surprisingly, artificial neurons can actually operate a lot faster than their biological counterparts through the use of existing logic gates.

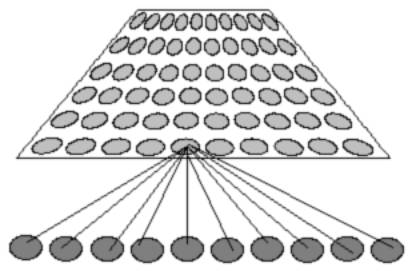

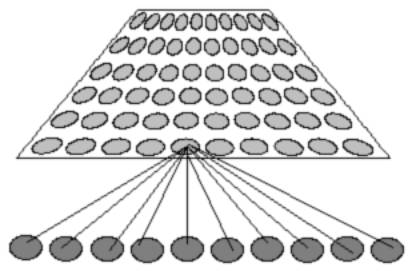

The Kohonen Network model for Neural Networks is made up of multiple lattice-like layers of neurons, all connected to the same input to the system, with all inputs going into every neuron (this is a very basic single-layer overview diagram).

Weightings are then applied to every input line between a neuron and the input pattern. In the diagram above, for example, the weights on the input lines to the neuron might be 4, 5, 8, 1, 4, 3, 7, 2, 6 and 9. Every neuron has different weights on the input lines between the input sequence and the neuron itself. It is these weights that are the key to the operation of a Kohonen network.

When a network has been fully trained (training is explained subsequently), for any given input sequence to the network all the Neurons calculate their value, which is produced by this formula:

$$\text{Output} = \sum_{i} \left(\text{input}_i - \text{weight}_i\right)^2$$

Here the output of the neuron is the sum, over all of its input lines, of the squared difference between the input and the weight on that line. The closer the output from the neuron is to 0, the better the match for the input pattern.
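The formula above can be sketched in a few lines of Java. This is a minimal illustration rather than the original 1999 source: the class name `NeuronOutput` and the method name `output` are hypothetical, and the weights are simply the example values quoted earlier.

```java
// Illustrative sketch only; NeuronOutput and output(...) are hypothetical names.
class NeuronOutput {

    /** Returns the sum over all input lines of (input - weight) squared. */
    static double output(double[] input, double[] weights) {
        if (input.length != weights.length) {
            throw new IllegalArgumentException("input and weights must have the same length");
        }
        double sum = 0.0;
        for (int i = 0; i < input.length; i++) {
            double diff = input[i] - weights[i];
            sum += diff * diff;
        }
        return sum;
    }

    public static void main(String[] args) {
        // The ten example weights quoted in the text.
        double[] weights = {4, 5, 8, 1, 4, 3, 7, 2, 6, 9};
        // An input identical to the weights is a perfect match.
        System.out.println(output(weights, weights)); // prints 0.0
    }
}
```

An output of 0 means the input pattern is identical to the neuron's weights; any mismatch on any input line pushes the output above 0.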

|

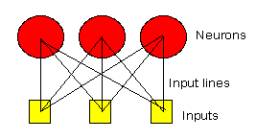

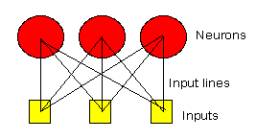

A trivial example of this is a network containing 3 neurons and 3 inputs, fully interconnected, meaning there are nine input lines. The network might be assembled as shown.

The weights to the neurons might, after training end up as:

For Neuron 1: Input 1 = 4, Input 2 = 7, Input 3 = 6.

For Neuron 2: Input 1 = 2, Input 2 = 5, Input 3 = 1.

For Neuron 3: Input 1 = 8, Input 2 = 3, Input 3 = 2.

Then, when an input sequence is presented to the network the formula is used to calculate the outputs.
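As a worked illustration, the sketch below applies the sum-of-squared-differences formula to the three neurons above and picks the one with the output closest to 0. The input values 3, 6 and 5 are a hypothetical example chosen for this illustration, and the class and method names are assumptions, not part of the original code.

```java
// Illustrative sketch only; WinnerExample and its methods are hypothetical names.
class WinnerExample {

    // Weights from the text: row n holds the weights from Inputs 1..3 to Neuron n + 1.
    static final double[][] WEIGHTS = {
        {4, 7, 6},
        {2, 5, 1},
        {8, 3, 2},
    };

    /** Sum over all input lines of (input - weight) squared. */
    static double output(double[] input, double[] weights) {
        double sum = 0.0;
        for (int i = 0; i < input.length; i++) {
            double diff = input[i] - weights[i];
            sum += diff * diff;
        }
        return sum;
    }

    /** Returns the zero-based index of the neuron whose output is closest to 0. */
    static int winner(double[] input) {
        int best = 0;
        double bestOutput = output(input, WEIGHTS[0]);
        for (int n = 1; n < WEIGHTS.length; n++) {
            double candidate = output(input, WEIGHTS[n]);
            if (candidate < bestOutput) {
                best = n;
                bestOutput = candidate;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] input = {3, 6, 5}; // hypothetical input sequence
        for (int n = 0; n < WEIGHTS.length; n++) {
            System.out.println("Neuron " + (n + 1) + ": " + output(input, WEIGHTS[n]));
        }
        // Prints:
        // Neuron 1: 3.0
        // Neuron 2: 18.0
        // Neuron 3: 43.0
        System.out.println("Winner: Neuron " + (winner(input) + 1)); // prints Winner: Neuron 1
    }
}
```

For this input, Neuron 1 gives 1 + 1 + 1 = 3, Neuron 2 gives 1 + 1 + 16 = 18 and Neuron 3 gives 25 + 9 + 9 = 43, so Neuron 1 is the best match.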

|

To train the network, the input sequences that the network is required to know are passed to the Kohonen map many, many times. Each time, the weights on the winning neuron and on those surrounding it are adjusted by the formula:

$$\text{NewWeight} = \text{OldWeight} + \text{gain} \times (\text{input} - \text{OldWeight})$$

This causes the weights on the neuron to adjust over time and become close to, if not the same as, the input sequence. The gain term is a numerical value that decreases over time (that is, over training iterations), which reduces the size of the steps of convergence towards the answer: for the first few cycles the weights change dramatically, and in later cycles the weight changes become smaller, so the change over the network is reduced. The sphere of influence of the winning neuron over its surrounding neighbours is also reduced over time. This neighbourhood of neurons allows noisy patterns to be recognised by the system: although a noisy pattern will not match up exactly with the winning neuron, it might match up with one of its surrounding neighbours. It also causes input sequences with similar characteristics to begin to collect together in zones on the network.
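The training procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original implementation: the text does not say how the gain and neighbourhood shrink, so the linear decay, the square neighbourhood, the random initial weights and all class, method and parameter names here are hypothetical choices.

```java
import java.util.Random;

// Illustrative sketch only; all names and the decay schedule are assumptions.
class KohonenTrainingSketch {
    final int rows;
    final int cols;
    final double[][][] weights; // weights[row][col][inputLine]

    KohonenTrainingSketch(int rows, int cols, int inputs, long seed) {
        this.rows = rows;
        this.cols = cols;
        this.weights = new double[rows][cols][inputs];
        Random random = new Random(seed);
        for (double[][] row : weights) {
            for (double[] neuron : row) {
                for (int i = 0; i < inputs; i++) {
                    neuron[i] = random.nextDouble(); // assumed: random start in [0, 1)
                }
            }
        }
    }

    /** Sum over all input lines of (input - weight) squared. */
    static double output(double[] input, double[] w) {
        double sum = 0.0;
        for (int i = 0; i < input.length; i++) {
            double diff = input[i] - w[i];
            sum += diff * diff;
        }
        return sum;
    }

    /** Returns {row, col} of the neuron whose output is closest to 0. */
    int[] winner(double[] input) {
        int[] best = {0, 0};
        double bestOutput = Double.MAX_VALUE;
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                double candidate = output(input, weights[r][c]);
                if (candidate < bestOutput) {
                    bestOutput = candidate;
                    best = new int[] {r, c};
                }
            }
        }
        return best;
    }

    /** Applies NewWeight = OldWeight + gain * (input - OldWeight) to the winner and its neighbours. */
    void trainStep(double[] input, double gain, int radius) {
        int[] win = winner(input);
        for (int r = Math.max(0, win[0] - radius); r <= Math.min(rows - 1, win[0] + radius); r++) {
            for (int c = Math.max(0, win[1] - radius); c <= Math.min(cols - 1, win[1] + radius); c++) {
                double[] w = weights[r][c];
                for (int i = 0; i < w.length; i++) {
                    w[i] += gain * (input[i] - w[i]);
                }
            }
        }
    }

    /** Presents every pattern once per cycle while the gain and radius shrink linearly (assumed schedule). */
    void train(double[][] patterns, int cycles, double startGain, int startRadius) {
        for (int cycle = 0; cycle < cycles; cycle++) {
            double remaining = 1.0 - (double) cycle / cycles;
            double gain = startGain * remaining;
            int radius = (int) Math.round(startRadius * remaining);
            for (double[] pattern : patterns) {
                trainStep(pattern, gain, radius);
            }
        }
    }

    public static void main(String[] args) {
        KohonenTrainingSketch map = new KohonenTrainingSketch(10, 10, 3, 42L);
        double[][] patterns = {{0.1, 0.9, 0.5}, {0.9, 0.1, 0.5}}; // hypothetical training data
        map.train(patterns, 500, 0.5, 5);
        for (double[] p : patterns) {
            int[] win = map.winner(p);
            System.out.println("winner (" + win[0] + ", " + win[1] + ") output "
                    + output(p, map.weights[win[0]][win[1]]));
        }
    }
}
```

Because each update moves a weight a fraction `gain` of the way towards the input, a gain of 0.5 halves the remaining difference on every input line, and a shrinking gain makes later adjustments progressively finer.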

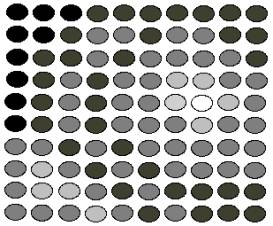

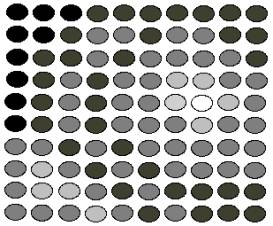

If the example above is then expanded to a much grander scale, say a lattice of 10 by 10 neurons, neighbourhoods begin to form around the neurons due to the way training works. The neurons in such a neighbourhood all have similar outputs to the winning neuron, although not as close to 0. If a graphical front end is then placed over this network, the neurons can be plotted based on their output values. By assigning a scaled colour to these outputs, the neighbourhoods can be seen:

Here, the winning neuron and those with similar outputs have a white or near white colour.
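One way to produce such a picture is sketched below. The text does not describe the actual colour scale used, so this is only an assumed scheme: outputs are scaled linearly to grey levels from 0 (black) to 255 (white), with the lowest output on the lattice drawn as white. The class and method names are hypothetical.

```java
// Illustrative sketch only; the linear grey scale is an assumption.
class NeighbourhoodShading {

    /** Maps each neuron output to a grey level: lowest output is 255 (white), highest is 0 (black). */
    static int[][] greyLevels(double[][] outputs) {
        double min = Double.MAX_VALUE;
        double max = -Double.MAX_VALUE;
        for (double[] row : outputs) {
            for (double value : row) {
                min = Math.min(min, value);
                max = Math.max(max, value);
            }
        }
        double range = max - min;
        int[][] grey = new int[outputs.length][];
        for (int r = 0; r < outputs.length; r++) {
            grey[r] = new int[outputs[r].length];
            for (int c = 0; c < outputs[r].length; c++) {
                // If every output is equal, treat all neurons as the best match.
                double scaled = range == 0.0 ? 0.0 : (outputs[r][c] - min) / range;
                grey[r][c] = (int) Math.round(255 * (1.0 - scaled));
            }
        }
        return grey;
    }

    public static void main(String[] args) {
        double[][] outputs = {{0.0, 4.0}, {10.0, 1.0}}; // hypothetical neuron outputs
        int[][] grey = greyLevels(outputs);
        System.out.println(grey[0][0]); // prints 255 (the winning neuron is white)
        System.out.println(grey[1][0]); // prints 0 (the worst match is black)
    }
}
```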

By then applying memory techniques and associated memory, the network can be completed. This memory is based on the neighbourhood surrounding the winning neuron: the smaller the overlap between zones, the easier it is to divide the network up into sections, with each section dedicated to a particular input sequence.

The way this network is used in the context of Sequence Recognition, Sequence Reproduction and Sequence Association is by first training the network to recognise a series of input sequences. Training is considered complete when the changes between the winning neurons for all the input sequences are small. Once the network is trained, the neighbourhood for each sequence can be established and mapped into memory locations for that sequence. To use the network, a sequence is applied to it and the network replies with a winning neuron, which is looked up in the system's memory to create a reply. For Sequence Recognition, the reply is a 1 or a 0: a 1 if the neuron matches up to a valid memory location. For Sequence Reproduction, the memory outputs the correct input sequence, such as Hello for Hello or Heffo. For Sequence Association, the memory replies with Goodbye for Hello, as the word hello has been associated with goodbye.
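The lookup step can be pictured with a small sketch. It assumes, purely for illustration, that each neuron is identified by an integer index and that the memory is a pair of hash maps; the original memory structure is not described in the text, and the class, method names and neuron indices below are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Illustrative sketch only; the map-based memory and all names are assumptions.
class SequenceMemory {
    private final Map<Integer, String> reproduction = new HashMap<>();
    private final Map<Integer, String> association = new HashMap<>();

    /** Maps every neuron in a trained neighbourhood to its sequence and its associated reply. */
    void store(int[] neighbourhood, String sequence, String associated) {
        for (int neuron : neighbourhood) {
            reproduction.put(neuron, sequence);
            association.put(neuron, associated);
        }
    }

    /** Sequence Recognition: true (1) if the winning neuron matches a valid memory location. */
    boolean recognise(int winningNeuron) {
        return reproduction.containsKey(winningNeuron);
    }

    /** Sequence Reproduction: the clean sequence stored for the winning neuron, if any. */
    Optional<String> reproduce(int winningNeuron) {
        return Optional.ofNullable(reproduction.get(winningNeuron));
    }

    /** Sequence Association: the reply associated with the winning neuron, if any. */
    Optional<String> associate(int winningNeuron) {
        return Optional.ofNullable(association.get(winningNeuron));
    }

    public static void main(String[] args) {
        SequenceMemory memory = new SequenceMemory();
        // Hypothetical neighbourhood of neuron indices that formed around "Hello" during training.
        memory.store(new int[] {12, 13, 17}, "Hello", "Goodbye");

        int winner = 13; // e.g. the neuron that wins for the noisy input "Heffo"
        System.out.println(memory.recognise(winner));          // prints true
        System.out.println(memory.reproduce(winner).get());    // prints Hello
        System.out.println(memory.associate(winner).get());    // prints Goodbye
    }
}
```

Storing the whole neighbourhood, rather than only the single winning neuron, is what lets a noisy input that lands on a neighbouring neuron still resolve to the same reply.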

Through a combination of different learning techniques, the neural network can be taught to function as an artificial intelligence brain that learns by itself and adapts to continually find the correct responses for new input sequences:

- Supervised learning is where the neural network is taught using predetermined ranges of inputs and outputs. The weights are given an initial value (a trial) and are then adjusted each iteration to teach the neurons to produce the right results for given inputs from a series of predefined inputs and outputs.

- Unsupervised learning is where the network does not know the right answer for any given problem or what its weights should be. Through subsequent further iterations trends can be discovered and these can be used to teach the network the right answers to the problems.

- Reinforcement Learning is the method that relies on trial and error. The weights between neurons that fire together more frequently are strengthened, and the weights on the less-used connections are reduced. This allows the dominant connections to take over and suppress the potential firing of incorrect results from the system, through return feedback from one node into the others.

The Virtual Bodies Project makes use of both Supervised and Reinforcement Learning techniques. It was initially trained with a series of pre-programmed responses, which were then dynamically changed and extended through user interactions, so that the network gained additional capabilities and result sets.

|

| Testing the Network Brain for the Virtual Bodies Project |

|

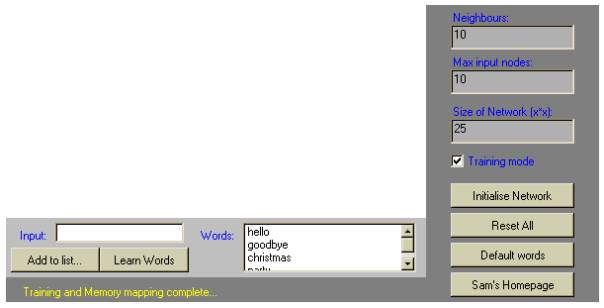

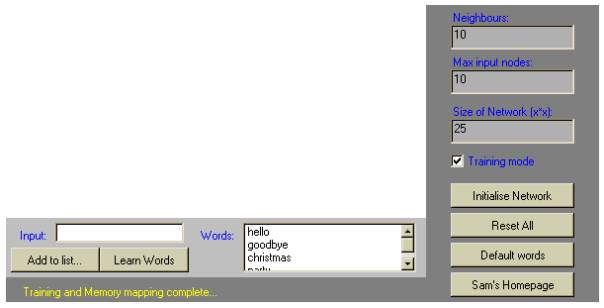

To test the Artificial Intelligence Brain (the new neural network), a simple "demonstration" application was developed prior to the main service and systems being built. With this brain, a variety of training sets were taught to the network and the results compared with the desired outputs. Essentially, the test checks that for a given input (which can be fuzzy) the network responds with the correctly associated output for the sequence.

For the Sequence Association, which is the most complex output sequence from a neural network and the most important capability needed for the Virtual Bodies Project, the following were given as inputs to the neural network:

Hello -> How are you?

Goodbye -> See you later.

Christmas -> jingle Bells.

Millennium -> Big party coming soon!

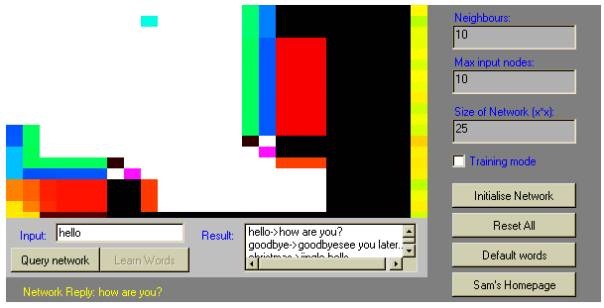

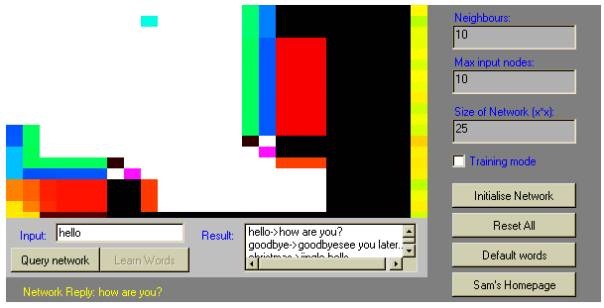

After the training is complete, the application displays its neural network in a visual form. (Note: training the network with base responses took roughly 5000 cycles.)

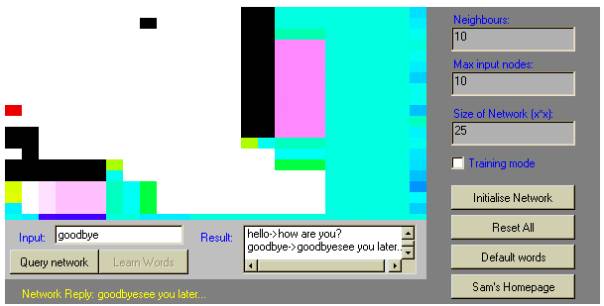

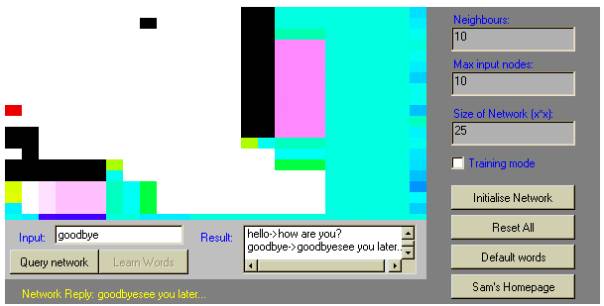

Providing inputs to the network then tests that this neural network has been established:

Entering Hello produces as an output How are you?.

Entering Goodbye produces See you later.

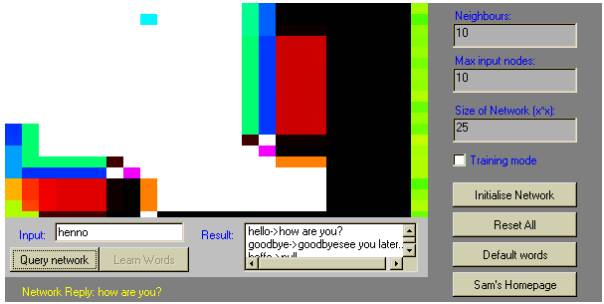

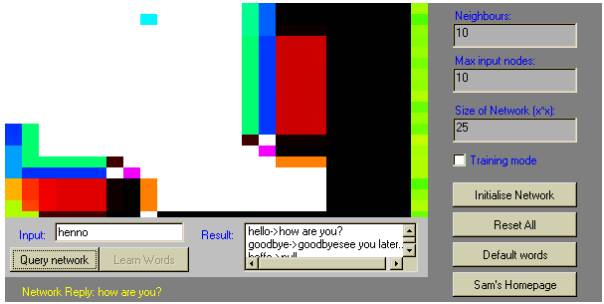

Then testing noisy inputs to the system:

Henno produces How are you?

As can be seen from these examples, the Neural Network could be said to operate correctly for the test data. This test was using a 25 x 25 network with an initial neighbourhood of 10.

Subsequently the network was greatly expanded and extended, to support hundreds of possible inputs and responses and dynamically grow new capabilities over time.

NOTE: This page is referenced in the Artificial Intelligence course from Northampton University - the course notes make great onwards reading and are available right about here.

|

| View the source code |

|

Now, remember this was written 20 years ago...! The source code is surprisingly simple as a result, yet highly powerful. It totals just 34KB to create an artificial neural network brain...

You can download it if you wish from GitHub. Please remember and respect copyright: yes, it might be old code, but copyright still applies.

© 1999 Sam Hill. All rights reserved.

Unfortunately, the full source code behind the Virtual Bodies Project implementation will not be released nor made available in any form.

|

|